As We May Think Sessions - Cyclops Camera

Project Overview

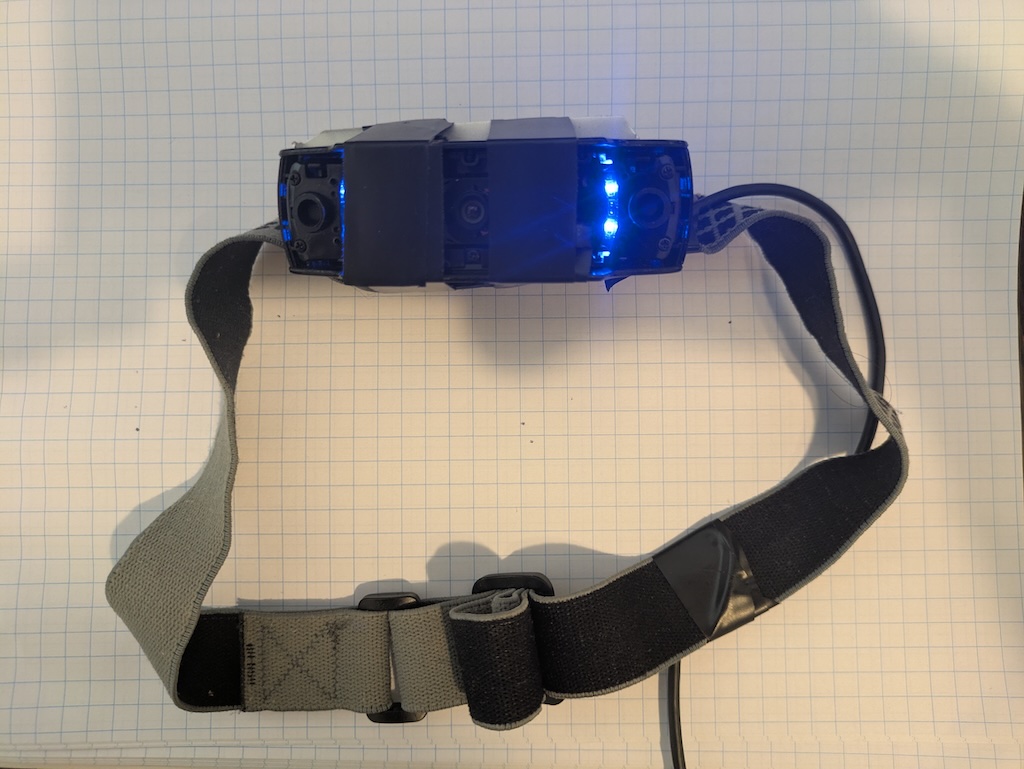

Inspired by Vannevar Bush's essay "As We May Think" and by the new capabilities of real-time multimodal models, this project mounted a Logitech C920 camera on a headlamp strap to create a "Cyclops camera" for collaborative human-machine reading.

Key Innovations

• Head-mounted camera providing first-person perspective to AI

• Real-time bidirectional dialogue with very low latency

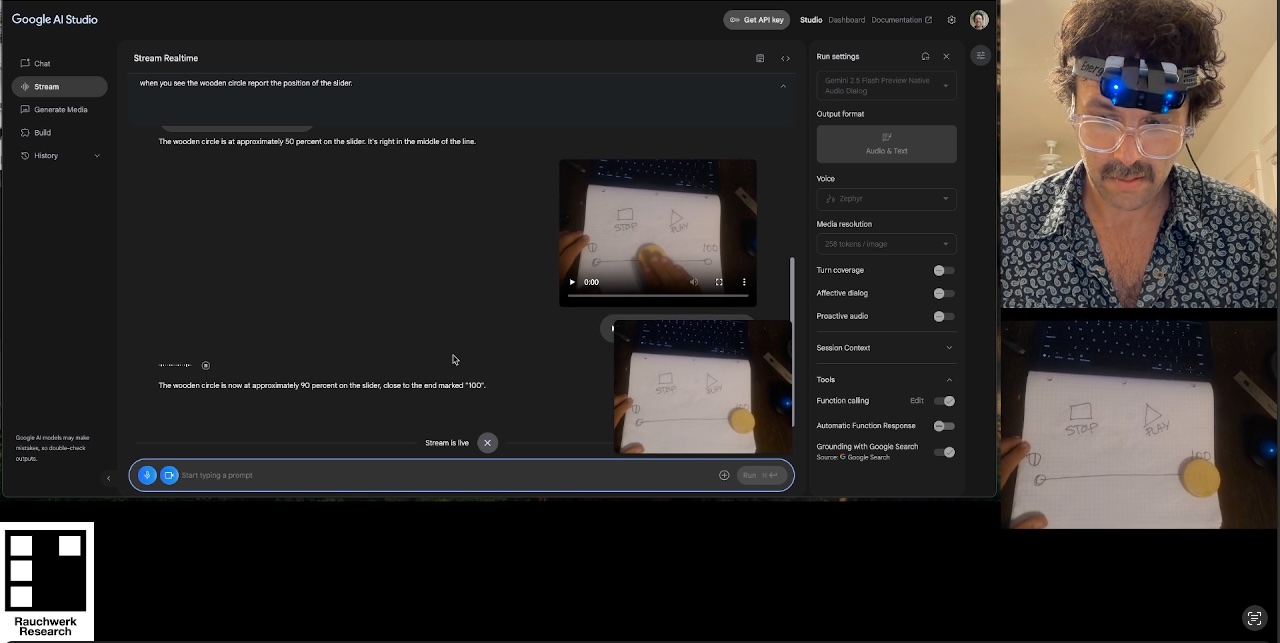

• Interactive tangible interfaces (wooden slider with graph paper)

• True real-time response - AI responds before actions complete

• Zero-trigger interaction model

Technical Implementation

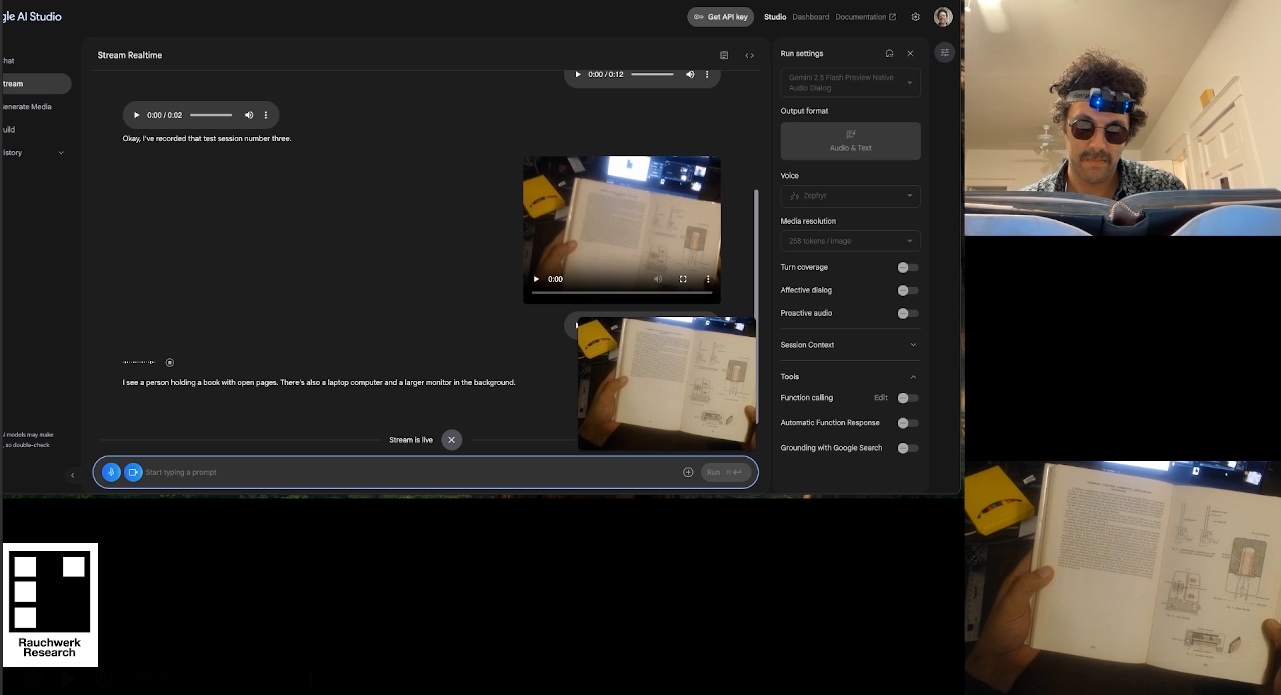

The system used a modified Logitech C920 camera attached to an Energizer headlamp strap and connected to Google AI Studio running Gemini 2.5 Pro with native audio. This setup enabled real-time visual processing and spoken conversation, letting the AI see approximately what the user sees from head height and respond with very low latency.
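The connection between the camera and the model is not described in detail here. As a hedged illustration of the full-duplex pattern behind the zero-trigger, pre-emptive behavior, the pure-Python sketch below streams frames continuously while a second task receives replies at the same time. `FakeLiveSession`, `stream_frames`, `receive_replies`, and `run_session` are hypothetical names; the fake session is an assumption standing in for a real streaming model connection and does not reproduce the Google AI Studio or Gemini API.

```python
import asyncio
from dataclasses import dataclass, field


@dataclass
class FakeLiveSession:
    """Hypothetical stand-in for a streaming multimodal model session.

    Not the Gemini API: it queues one text reply per frame it receives,
    where a real session would stream back generated speech.
    """
    replies: asyncio.Queue = field(default_factory=asyncio.Queue)

    async def send_frame(self, frame: bytes) -> None:
        # Pretend the model reacts to each frame as soon as it arrives.
        await self.replies.put(f"reply: {frame.decode()}")

    async def close(self) -> None:
        # None is a sentinel telling the receiver the stream has ended.
        await self.replies.put(None)


async def stream_frames(session, frames, log: list, interval_s: float) -> None:
    """Push frames continuously; no button press or wake word gates them."""
    for frame in frames:
        log.append(f"sent: {frame.decode()}")
        await session.send_frame(frame)
        # Yield to the event loop, like waiting for the next camera frame.
        await asyncio.sleep(interval_s)
    await session.close()


async def receive_replies(session, log: list) -> None:
    """Consume replies as they are produced, concurrently with sending."""
    while (reply := await session.replies.get()) is not None:
        log.append(reply)


async def run_session(frames, interval_s: float = 0.01) -> list:
    session = FakeLiveSession()
    log: list = []
    # Sending and receiving run at the same time (full duplex).
    await asyncio.gather(
        stream_frames(session, frames, log, interval_s),
        receive_replies(session, log),
    )
    return log


if __name__ == "__main__":
    # Hypothetical frame labels standing in for JPEG-encoded camera frames.
    for event in asyncio.run(run_session([b"page-1", b"slider-left", b"button"])):
        print(event)
```

Because sending never waits for a reply, a reply to the first frame can arrive while later frames (the rest of the user's action) are still being sent, which mirrors the pre-emptive responses described above.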

Experimental Results

The prototype successfully demonstrated real-time multimodal interaction, including:

• Reading and discussing the book "How Things Work" in real time

• Interactive control of a constructed slider interface

• Pressing buttons and receiving real-time feedback

• Pre-emptive AI responses to actions still in progress

Future Vision

This prototype opens a discussion about using streaming multimodal large language models for fundamentally new kinds of interfaces: more convivial interactions that go beyond the traditional "fingers on glass" paradigm. The work suggests opportunities to reimagine human-computer interaction with frontier AI models.

Publication

The results are documented in a Rauchwerk Research Substack post that explores collaborative human-machine reading and the potential for new tangible interfaces.

Rauchwerk Research